How Significant is Significance Arithmetic?

Central to our mission at computerbasedmath.org is thinking through from first principles what's important and what's not to the application of maths in the real, modern, computer-based world. This is one of the most challenging aspects of our project: it's very hard to shake off the dogma of our own maths education and tell whether something is for now and the future, or if really it's for the history of maths.

This week's issue is significance arithmetic, similar to what you might know from school as significant figures. The idea is that when you do a calculation, you compute not just a single value but also bounds that represent the uncertainty of that value. That way you get an idea of how accurate your answer is, or indeed whether it has any digits of accuracy at all.

How important is the concept of significant figures to applying maths? And if useful, what of the mechanics of computing the answer? Is significance truly significant in concept and calculation for today? And therefore should it be prominent in today's maths education?

Here's our surprising conclusion. Significance arithmetic should be far more significant than it is in real-life maths today, rather like its role (if not all the detail) in maths education, where it is covered fairly extensively. It would really help people to get good answers, make far fewer misinterpretations and take a view on whether any of the numbers are justifiable. But people just aren't using it much in their maths lives.

Yes, paradoxically, I'm saying that this is a case in which traditional maths education got it right(er) and real-world maths didn't know what was good for it! Education is ahead!

So, what's gone wrong with significance out there?

Let's start with traditional notation. If you write down 3.2, it's unclear in practice whether you're justifiably claiming exactly "2 significant figures", or whether those digits were simply what came to hand or all you could be bothered to calculate by hand. The notation (like so much of traditional maths notation) is ambiguous, causing huge confusion to practitioners and particularly students.

Then there's how calculators and computers usually operate. They churn out whatever their hardware "machine precision" is (often 16 or 19 digits) even if it has no justification from input or calculation. People either ignore the extra digits or, if someone has transcribed them, just think those quoting them are ill-educated (rather like the way misuse of apostrophes suggests poor education in English).

When you use significance arithmetic there are several stages that can trip you up. First, you have to be clear what your input precision really is: what error am I inputting, and do (say) 2 digits really represent it? Then the calculations you do dramatically change what significance can be justified coming out. For example, a steep slope of a function reduces the relative significance coming out (e.g. to 1 digit, because a little variation in the x value results in a big y change), whereas a small gradient can increase the justifiable significant digits (e.g. to 4 digits). That means it really matters not only which functions you're computing with, but where you are on the function.

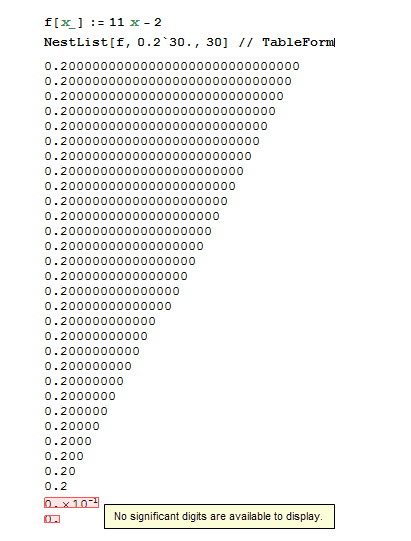

Screen-capped from an old video: Comparing how the output precision of a computation can vary widely even for a simple function where the input precision is being kept constant.
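To make the slope effect concrete, here is a minimal Python sketch. It is not how any particular system implements significance arithmetic. It uses the standard first-order estimate that a relative error in x is amplified by the relative condition number |x f'(x) / f(x)|. The helper name `justified_digits` and the choice of exp as the example function are illustrative assumptions.

```python
import math

def justified_digits(f, dfdx, x, input_digits):
    """First-order estimate of the significant digits justified in f(x).

    Assumes the relative error in x is about 10**-input_digits, and that
    x, f(x) and f'(x) are all nonzero.
    """
    # Relative condition number: how much a relative error in x is amplified.
    condition = abs(x * dfdx(x) / f(x))
    return input_digits - math.log10(condition)

# Same function, same 2-digit input, different places on the curve.
# For exp, the condition number is simply |x|.
for x in (0.01, 1.0, 10.0):
    digits = justified_digits(math.exp, math.exp, x, input_digits=2)
    print(f"x = {x:>5}: about {digits:.1f} justified digits out")
```

Under these assumptions, a 2-digit input to exp justifies about 4 digits at x = 0.01 but only about 1 digit at x = 10, even though the function and the input precision are unchanged.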

This is messy, time-consuming and tedious to handle by hand. And yet most numerical computation software (or hardware) doesn't do significance arithmetic.

The result is predictable: significance arithmetic is usually too hard to use, so people don't bother. Normally a lack of accessible computing holds back what's covered in education; here, rather than cutting significance out of education, it has cut it out of the real world. Both in and out of education, computing significance has traditionally been much too complex to bother with.

Mathematica is an exception. Right from the beginning we've had significance (and big number) arithmetic. We invented a notation using ` to specify input precision; all relevant functions compute significance and output only what's justified. Some (e.g. for numerical differential equation solving) even step up precision sufficiently during calculation to meet a goal you have set for output precision, assuming this isn't trumped by too low an input precision.

We have fun demoing a collapse of significance problem in Excel v. Mathematica. At each iteration the result should be 0.2 but the precision is constantly reducing. Excel goes way out after just 20 steps with no warning.

Excel does not track significant digits and very quickly produces nonsensical answers. Easy to spot the failure of significance in this simple example; potentially disastrous inside a more complex model.

Starting with 30 significant digits input to Mathematica, it tracks and displays reducing justifiable digits, until an error box alerts to a complete loss of significance.

We've picked on Excel here, but almost any numerical software will behave somewhat similarly on an example like this.
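The same collapse can be sketched in Python, whose floats are ordinary machine-precision numbers. The post doesn't give the exact iteration used in the demo, so the map x → 11x − 2 below is an assumption: 0.2 is its fixed point, and each step multiplies any existing error by 11. The second function is a crude hand-rolled stand-in for significance tracking (an exact value plus an error bound), not Mathematica's actual algorithm.

```python
from fractions import Fraction
import math

def iterate_float(steps):
    """Iterate x -> 11*x - 2 from 0.2 in machine precision, with no tracking."""
    x = 0.2
    values = [x]
    for _ in range(steps):
        x = 11 * x - 2
        values.append(x)
    return values

def iterate_tracked(steps, input_digits=30):
    """Iterate the same map while tracking how many digits remain justified.

    The value is held exactly; the error bound starts at a relative
    10**-input_digits and grows by a factor of 11 per step.
    """
    x = Fraction(1, 5)
    err = x / 10**input_digits
    history = []
    for step in range(1, steps + 1):
        x = 11 * x - 2
        err = 11 * err
        digits = -math.log10(err / abs(x))
        if digits <= 0:
            # No digits can be justified any more: stop rather than mislead.
            history.append((step, None))
            break
        history.append((step, digits))
    return history

floats = iterate_float(20)
print("machine precision after 20 steps:", floats[-1])
for step, digits in iterate_tracked(40):
    label = "no significant digits left" if digits is None else f"{digits:.1f} digits"
    print(f"step {step:2d}: {label}")
```

In the untracked version the tiny representation error in 0.2 is amplified silently until the result is nowhere near 0.2. In the tracked version the justified digits fall by roughly one per step, and the loop stops once none are left.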

Yet if you use the right numerical tools for the job, significance arithmetic takes on its true significance.

Back to CBM, and how significance should or shouldn't figure in what's covered. Our view is that the failure of widespread adoption of significance in real life isn't because its worth has been superseded or mechanised away. To the contrary, it's because computing (with some notable exceptions!) has yet to make it easy enough for the apparent benefit. That will change, and hopefully before our students graduate. So we're voting for more significant significance!

One final point. This is but one example of thousands across all areas of the maths curriculum that require deep rethinking for fundamental reform of the subject of maths. I hope it gives a flavour of why CBM is so hard a project to do thoroughly, and why it requires so broad a view of the use of mathematics and the state of computing technology.